University of Science and Technology Beijing

TCSVT 2024

*First author, †Corresponding author

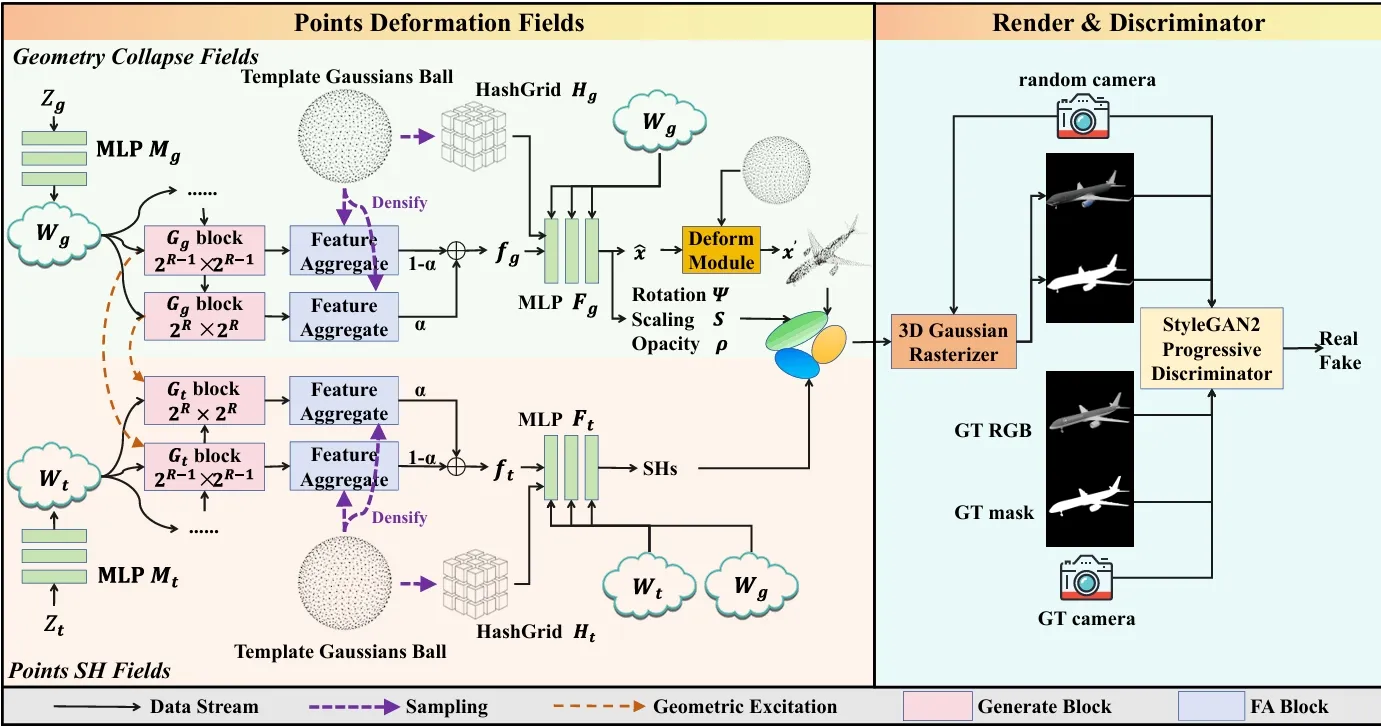

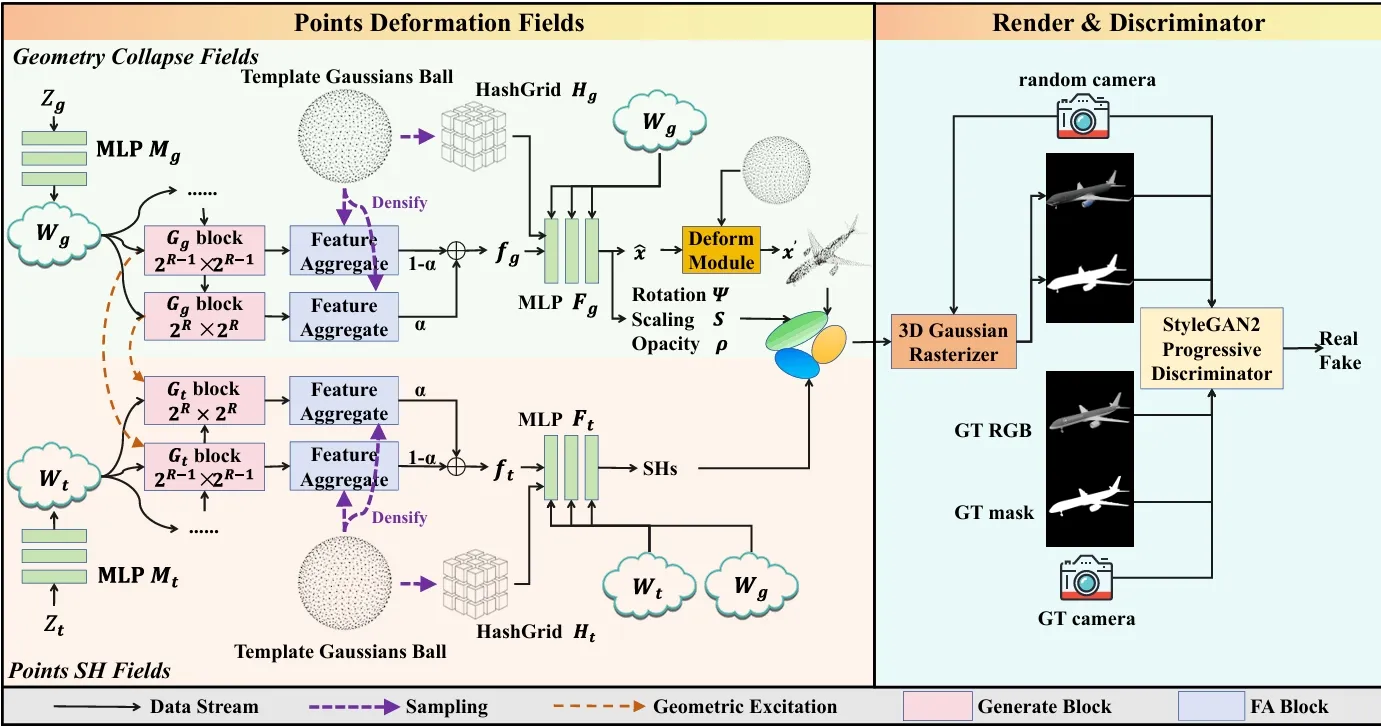

3D Gaussian Splatting has recently shown significant advances in rendering speed and scene composition quality, which broadens its industrial applications and increases the demand for 3D Gaussian asset generation. However, mature 3D generation techniques predominantly rely on implicit representations, which often struggle to balance geometric quality with editability. Producing 3D Gaussian assets generally involves diffusion models that require a two-stage process of reconstruction followed by generation, resulting in substantial training and inference costs. To overcome these challenges, we introduce GET3DGS, an approach that combines 3D-aware GANs with the 3D Gaussian Splatting representation. The method manipulates the physical attributes of 3D Gaussians, such as geometry and texture, via point deformation fields. With faster inference and end-to-end training, our model outperforms existing diffusion-based methods. By learning high-quality Gaussian point cloud geometry from 2D images, our approach reduces the cost of accumulating 3D material and produces data compatible with 3D Gaussian rendering engines. We evaluate the generative performance of our model on ShapeNet and OmniObject3D and demonstrate competitive image and geometric quality relative to previous methods.

The geometry collapse fields are designed to map a template point cloud to a specific distribution, addressing the challenges of large solution spaces and unordered point cloud data. By introducing a strategy of density correction followed by radial collapse, the approach optimizes the solution space and point cloud density. This involves computing radial and rotational offsets in spherical coordinates, with the deformation function segmented into radial and rotational components. The method employs a feature aggregate module and a bi-plane structure for efficient feature utilization, enhancing network stability and rendering quality. The final representation integrates 3D Gaussian attributes, using activation functions to constrain physical attributes and prevent gradient instability.
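The split of the deformation into a rotational part and a radial part can be illustrated with a minimal sketch. It assumes one particular order (angular offsets first, as a stand-in for density correction, then a radial offset as the collapse) and simple additive offsets; the function names `to_spherical`, `to_cartesian`, and `deform_point` are hypothetical and are not taken from the paper, where the offsets would be predicted by the network.

```python
import math


def to_spherical(x, y, z):
    """Convert Cartesian coordinates to (r, polar angle theta, azimuth phi)."""
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        return 0.0, 0.0, 0.0
    theta = math.acos(z / r)   # polar angle in [0, pi]
    phi = math.atan2(y, x)     # azimuth in (-pi, pi]
    return r, theta, phi


def to_cartesian(r, theta, phi):
    """Convert spherical coordinates back to Cartesian."""
    return (
        r * math.sin(theta) * math.cos(phi),
        r * math.sin(theta) * math.sin(phi),
        r * math.cos(theta),
    )


def deform_point(point, d_r, d_theta, d_phi):
    """Apply a rotational offset, then a radial offset, to one template point.

    Assumption: the offsets are plain additive terms in spherical coordinates.
    """
    r, theta, phi = to_spherical(*point)
    # Rotational component: slide the point along the sphere.
    theta = min(max(theta + d_theta, 0.0), math.pi)
    phi = phi + d_phi
    # Radial component: move the point along its ray, never past the origin.
    r = max(r + d_r, 0.0)
    return to_cartesian(r, theta, phi)


# A template point at the north pole collapses halfway toward the origin.
print(deform_point((0.0, 0.0, 1.0), d_r=-0.5, d_theta=0.0, d_phi=0.0))
```

Keeping the two components separate means a pure rotational offset never changes a point's distance from the origin, which is one way to read the claim that the strategy narrows the solution space.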

The points SH fields are responsible for generating the texture of 3D Gaussians, utilizing a structure similar to the geometry collapse fields. Texture noise is encoded into a styled vector and processed through a textural generator block, with SH features generated on two planes to aid in shape coloring. Geometric excitation is applied by injecting intermediate features from the geometry collapse fields into the points SH fields, modulating the texture to adapt to the geometric distribution. This modulation ensures that texture features are influenced appropriately by geometry without being overwhelmed. The geometry and texture stylized vectors are concatenated to guide the MLP decoder in outputting texture attributes. The same sampling and aggregation methods used in geometry collapse fields are applied, allowing the learning of SH coefficients for effective texture representation.
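To make the role of the SH coefficients concrete, the sketch below evaluates a view-dependent color from degree-0 and degree-1 spherical harmonics, following the sign and offset convention commonly used in 3D Gaussian Splatting renderers. The function name `sh_to_rgb` and the restriction to degree 1 are assumptions for illustration; the SH degree used by GET3DGS is not stated in this section.

```python
import math

SH_C0 = 0.28209479177387814  # 1 / (2 * sqrt(pi)), degree-0 basis constant
SH_C1 = 0.4886025119029199   # sqrt(3 / (4 * pi)), degree-1 basis constant


def sh_to_rgb(sh, view_dir):
    """Evaluate an RGB color from SH coefficients for one Gaussian.

    sh: four (r, g, b) tuples, the DC term followed by three degree-1 terms.
    view_dir: direction from the camera to the Gaussian (any nonzero length).
    """
    norm = math.sqrt(sum(c * c for c in view_dir))
    x, y, z = (c / norm for c in view_dir)
    rgb = []
    for ch in range(3):
        value = SH_C0 * sh[0][ch]
        value += (-SH_C1 * y * sh[1][ch]
                  + SH_C1 * z * sh[2][ch]
                  - SH_C1 * x * sh[3][ch])
        # Shift by 0.5 and clamp at zero, as in common 3DGS renderers.
        rgb.append(max(value + 0.5, 0.0))
    return tuple(rgb)


# With all degree-1 terms at zero the color does not depend on the view.
flat = [(1.0, 0.0, -1.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)]
print(sh_to_rgb(flat, (0.0, 0.0, 1.0)))
```

A texture decoder that outputs these coefficients per point therefore controls both the base color (the DC term) and how the color shifts with viewing direction (the higher-degree terms).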

Progressive densification training is a strategy designed to address the complexity of learning geometry and color mappings for large point clouds, especially when using GANs. This approach combines progressive generator resolution with the splitting of 3D Gaussians. Initially, a base resolution is set, and as training progresses, the resolution is doubled, allowing the model to densify by splitting coordinate positions. This method leverages the learnable rotation and scaling capabilities of Gaussian point clouds, ensuring training stability without reinitialization. At lower resolutions, fewer 3D Gaussians approximate the object’s basic structure and color, while high-resolution training refines these details. The process is mathematically described by progressively blending features from current and previous resolutions. Additionally, a Gaussian template is used to ensure uniform point distribution across a sphere’s surface, calculated using spherical coordinates. This template adapts to different resolutions by increasing point density, as detailed in the experimental configurations.
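Two ingredients of this paragraph can be sketched in a few lines: a near-uniform spherical template and the fade-in blend between resolutions. The sketch uses a Fibonacci lattice as a stand-in for the template construction and a linear blend weight `alpha`; both are assumptions chosen for illustration, since the paper's exact template formula and blending schedule are not given in this section. The names `sphere_template` and `blend_features` are hypothetical.

```python
import math


def sphere_template(n):
    """Return n near-uniformly spread points on the unit sphere.

    Assumption: a Fibonacci lattice, parameterized by polar angle theta and
    azimuth phi, stands in for the paper's template construction.
    """
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for i in range(n):
        z = 1.0 - (2.0 * i + 1.0) / n   # evenly spaced heights in (-1, 1)
        theta = math.acos(z)
        phi = golden_angle * i
        points.append((math.sin(theta) * math.cos(phi),
                       math.sin(theta) * math.sin(phi),
                       z))
    return points


def blend_features(prev_upsampled, current, alpha):
    """Blend features of the previous (upsampled) and current resolution.

    alpha rises from 0 to 1 as the new resolution is faded in.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return [(1.0 - alpha) * p + alpha * c
            for p, c in zip(prev_upsampled, current)]


coarse = sphere_template(256)   # hypothetical low-resolution point count
fine = sphere_template(512)     # denser template after one doubling
print(len(coarse), len(fine))
print(blend_features([0.0, 2.0], [4.0, 6.0], alpha=0.25))
```

At `alpha = 0` the output equals the previous resolution's features, so the newly added Gaussians start from what the coarse stage already learned rather than from a fresh initialization.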

| Dataset | Type | Model | FID↓ | KID↓(‰) | COV↑(%) | MMD↓ |
|---|---|---|---|---|---|---|
| Car | 3D | PointFlow | - | - | 60.57 | 0.85 |
| Car | 3D | DiT-3D | - | - | 31.70 | 2.98 |
| Car | 3D | DPM | - | - | 60.24 | 0.95 |
| Car | 3D | PSF | - | - | 70.73 | 1.03 |
| Car | 3D | MeshDiffusion | - | - | 66.55 | 1.12 |
| Car | 2D→3D | GET3D | 13.19 | 5.66 | 44.13 | 0.80 |
| Car | 2D→3D | DiffTF | 73.21 | 58.72 | 49.53 | 1.36 |
| Car | 2D→3D | GaussianCube | 16.77 | 5.22 | 61.48 | 0.90 |
| Car | 2D→3D | GET3DGS(ours) | 11.03 | 4.17 | 66.97 | 1.04 |
| Chair | 3D | PointFlow | - | - | 72.94 | 2.67 |
| Chair | 3D | DiT-3D | - | - | 76.83 | 2.82 |
| Chair | 3D | DPM | - | - | 81.73 | 2.87 |
| Chair | 3D | PSF | - | - | 81.92 | 2.65 |
| Chair | 3D | MeshDiffusion | - | - | 82.26 | 2.65 |
| Chair | 2D→3D | GET3D | 30.16 | 18.32 | 69.92 | 3.90 |
| Chair | 2D→3D | DiffTF | 80.56 | 65.34 | 76.22 | 3.64 |
| Chair | 2D→3D | GaussianCube | 32.17 | 15.06 | 63.39 | 3.60 |
| Chair | 2D→3D | GET3DGS(ours) | 24.41 | 13.68 | 75.44 | 3.90 |
| MotorBike | 3D | PointFlow | - | - | 64.57 | 0.88 |
| MotorBike | 3D | DiT-3D | - | - | 75.34 | 0.81 |
| MotorBike | 3D | DPM | - | - | 80.67 | 0.99 |
| MotorBike | 3D | PSF | - | - | 74.46 | 1.25 |
| MotorBike | 3D | MeshDiffusion | - | - | 89.04 | 1.08 |
| MotorBike | 2D→3D | GET3D | 74.04 | 45.66 | 65.75 | 1.74 |
| MotorBike | 2D→3D | DiffTF | 102.46 | 88.95 | 41.02 | 6.05 |
| MotorBike | 2D→3D | GaussianCube | 58.95 | 30.03 | 68.60 | 0.95 |
| MotorBike | 2D→3D | GET3DGS(ours) | 61.15 | 33.98 | 81.25 | 0.92 |
| OmniObject3D | 2D→3D | GET3D | 28.92 | 12.01 | 31.21 | 6.34 |
| OmniObject3D | 2D→3D | DiffTF | 93.85 | 51.88 | 34.59 | 6.05 |
| OmniObject3D | 2D→3D | GaussianCube | 26.45 | 11.15 | 35.10 | 6.26 |
| OmniObject3D | 2D→3D | GET3DGS(ours) | 26.26 | 11.13 | 32.59 | 6.46 |

Table: Evaluation of 2D image quality and 3D point cloud geometry quality under 512×512 resolution. The underlined values represent better geometric performance of the 3D baseline.
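For readers unfamiliar with the geometry columns, the sketch below computes coverage (COV) and minimum matching distance (MMD) between a generated set and a reference set of point clouds, using their standard definitions. It assumes a squared-distance Chamfer metric and brute-force nearest-neighbor search; the distance actually used for the table is not stated here, and the function names `chamfer` and `coverage_and_mmd` are hypothetical.

```python
def chamfer(a, b):
    """Symmetric Chamfer distance between two point clouds (lists of 3-tuples)."""
    def sq_dist(p, q):
        return sum((pi - qi) ** 2 for pi, qi in zip(p, q))

    a_to_b = sum(min(sq_dist(p, q) for q in b) for p in a) / len(a)
    b_to_a = sum(min(sq_dist(q, p) for p in a) for q in b) / len(b)
    return a_to_b + b_to_a


def coverage_and_mmd(generated, reference):
    """Return (COV, MMD) for a set of generated clouds against a reference set.

    COV: fraction of reference clouds that are the nearest neighbor of at
         least one generated cloud (higher means more diversity).
    MMD: mean, over reference clouds, of the distance to the closest
         generated cloud (lower means better fidelity).
    """
    matched = set()
    for g in generated:
        dists = [chamfer(g, r) for r in reference]
        matched.add(dists.index(min(dists)))
    cov = len(matched) / len(reference)
    mmd = sum(min(chamfer(g, r) for g in generated)
              for r in reference) / len(reference)
    return cov, mmd


# Two reference shapes, but both generated shapes imitate only the first one.
shape_a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shape_b = [(0.0, 5.0, 0.0), (1.0, 5.0, 0.0)]
print(coverage_and_mmd([shape_a, shape_a], [shape_a, shape_b]))
```

The toy example shows why the two metrics are reported together: a generator that repeats one accurate shape scores a low COV, and the uncovered reference shape still raises the MMD.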

@ARTICLE{10777594,
  author={Yu, Haochen and Gong, Weixi and Chen, Jiansheng and Ma, Huimin},
  journal={IEEE Transactions on Circuits and Systems for Video Technology},
  title={GET3DGS: Generate 3D Gaussians Based on Points Deformation Fields},
  year={2024},
  volume={},
  number={},
  pages={1-1},
  keywords={Three-dimensional displays;Solid modeling;Training;Rendering (computer graphics);Deformation;Point cloud compression;Geometry;Image reconstruction;Shape;Image color analysis;3D Gaussian Splatting;3D generation;Differentiable rendering},
  doi={10.1109/TCSVT.2024.3511342}}